Master Databricks-Certified-Data-Engineer-Associate Exam with Reliable Practice Questions

Which of the following statements regarding the relationship between Silver tables and Bronze tables is always true?

Start a Discussions

A data analyst has developed a query that runs against Delta table. They want help from the data engineering team to implement a series of tests to ensure the data returned by the query is clean. However, the data engineering team uses Python for its tests rather than SQL.

Which of the following operations could the data engineering team use to run the query and operate with the results in PySpark?

Start a Discussions

Which of the following describes a scenario in which a data engineer will want to use a single-node cluster?

Correct : A

1:Single Node clusters | Databricks on AWS

2:Autoscaling | Databricks on AWS

3:SQL Endpoints | Databricks on AWS

4:Databricks Lakehouse Platform | Databricks on AWS

: [Spark UI | Databricks on AWS]

Start a Discussions

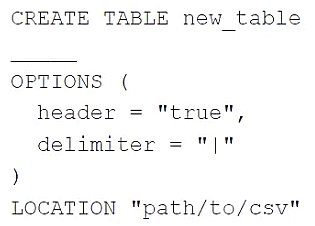

A data engineer needs to create a table in Databricks using data from a CSV file at location /path/to/csv.

They run the following command:

Which of the following lines of code fills in the above blank to successfully complete the task?

Start a Discussions

A data engineer wants to create a new table containing the names of customers who live in France.

They have written the following command:

CREATE TABLE customersInFrance

_____ AS

SELECT id,

firstName,

lastName

FROM customerLocations

WHERE country = 'FRANCE';

A senior data engineer mentions that it is organization policy to include a table property indicating that the new table includes personally identifiable information (Pll).

Which line of code fills in the above blank to successfully complete the task?

Correct : D

To include a property indicating that a table contains personally identifiable information (PII), the TBLPROPERTIES keyword is used in SQL to add metadata to a table. The correct syntax to define a table property for PII is as follows:

CREATE TABLE customersInFrance

USING DELTA

TBLPROPERTIES ('PII' = 'true')

AS

SELECT id,

firstName,

lastName

FROM customerLocations

WHERE country = 'FRANCE';

The TBLPROPERTIES ('PII' = 'true') line correctly sets a table property that tags the table as containing personally identifiable information. This is in accordance with organizational policies for handling sensitive information.

Reference: Databricks documentation on Delta Lake: Delta Lake on Databricks

Start a Discussions