Master Amazon SOA-C02 Exam with Reliable Practice Questions

The company requires a disaster recovery solution for an Aurora PostgreSQL database with a 20-second RPO.

Options:

Correct : A

Aurora Global Databases are designed for cross-Region disaster recovery with very low RPO, meeting the 20-second requirement. Setting up Aurora as a global database with the correct configuration ensures low-latency replication and rapid failover, making it ideal for compliance with strict disaster recovery requirements.

Topic 2, Simulation


SIMULATION

If your AWS Management Console browser does not show that you are logged in to an AWS account, close the browser and relaunch the console by using the AWS Management Console shortcut from the VM desktop.

If the copy-paste functionality is not working in your environment, refer to the instructions file on the VM desktop and use Ctrl+C, Ctrl+V or Command-C, Command-V.

Configure Amazon EventBridge to meet the following requirements.

1. Use the us-east-2 Region for all resources.

2. Unless specified below, use the default configuration settings.

3. Use your own resource naming unless a resource name is specified below.

4. Ensure all Amazon EC2 events in the default event bus are replayable for the past 90 days.

5. Create a rule named RunFunction to send the exact message every 15 minutes to an existing AWS Lambda function named LogEventFunction.

6. Create a rule named SpotWarning to send a notification to a new standard Amazon SNS topic named TopicEvents whenever an Amazon EC2 Spot Instance is interrupted. Do NOT create any topic subscriptions. The notification must match the following structure:

Input Path:

{"instance" : "$.detail.instance-id"}

Input template:

"The EC2 Spot Instance

Correct : A

Here are the steps to configure Amazon EventBridge to meet the above requirements:

Log in to the AWS Management Console by using the AWS Management Console shortcut from the VM desktop. Make sure that you are logged in to the desired AWS account.

Go to the EventBridge service in the us-east-2 Region.

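In the EventBridge service, navigate to the 'Archives' page.

Click on the 'Create archive' button.

Give a name to your archive, select 'default' as the source event bus, set the retention period to 90 days, and filter the archive with an event pattern that matches Amazon EC2 events.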

Navigate to the 'Rules' page and create a new rule named 'RunFunction'.

For the rule type, select 'Schedule' and set the schedule to run at a fixed rate of every 15 minutes.

For the target, select 'Lambda function' and choose the existing AWS Lambda function named 'LogEventFunction'.

Create another rule named 'SpotWarning'.

In the 'Event pattern' section, select 'EC2' as the AWS service and 'EC2 Spot Instance Interruption Warning' as the event type.

For the target, select 'SNS topic' and create a new standard Amazon SNS topic named 'TopicEvents'. Do not add any subscriptions to the topic.

In the 'Input Transformer' section, set the Input Path to {"instance" : "$.detail.instance-id"} and Input template to "The EC2 Spot Instance <instance> has been interrupted on account.

Now all Amazon EC2 events in the default event bus will be replayable for the past 90 days.
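To make the Input Path and Input template settings more concrete, here is a minimal Python sketch that imitates what an input transformer does to a Spot interruption event. It is a simplified simulation, not EventBridge's own implementation: it only handles plain dotted paths, the sample event is made up, and `INPUT_TEMPLATE` is a hypothetical shortened template because the full template is truncated in the text above.

```python
import re

# Event pattern that matches Spot interruption warnings emitted by Amazon EC2.
SPOT_INTERRUPTION_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Spot Instance Interruption Warning"],
}

# Input path taken from the task description.
INPUT_PATHS = {"instance": "$.detail.instance-id"}

# Hypothetical, shortened template (assumption): the real one is cut off in the source.
INPUT_TEMPLATE = '"The EC2 Spot Instance <instance> has been interrupted."'


def resolve_path(event, path):
    """Resolve a simple dotted JSONPath such as "$.detail.instance-id"."""
    if not path.startswith("$."):
        raise ValueError(f"unsupported path: {path}")
    value = event
    for key in path[2:].split("."):
        value = value[key]
    return value


def apply_input_transformer(event, input_paths, input_template):
    """Replace each <name> placeholder with the value found at its input path."""
    values = {name: resolve_path(event, p) for name, p in input_paths.items()}
    return re.sub(
        r"<([A-Za-z0-9_-]+)>",
        lambda match: str(values[match.group(1)]),
        input_template,
    )


# Made-up sample event with only the fields this sketch needs.
sample_event = {
    "source": "aws.ec2",
    "detail-type": "EC2 Spot Instance Interruption Warning",
    "detail": {"instance-id": "i-0123456789abcdef0", "instance-action": "terminate"},
}

message = apply_input_transformer(sample_event, INPUT_PATHS, INPUT_TEMPLATE)
print(message)
```

The placeholder name in the template (`instance`) has to match a key in the input path map, which is why the two settings are always configured together.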

Note:

You can use the AWS Management Console, AWS CLI, or SDKs to create and manage EventBridge resources.

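You can replay archived events from the EventBridge 'Replays' page. CloudTrail event history records API activity for the past 90 days, but it does not replay events to an event bus.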
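For readers who prefer the SDK route mentioned in the note, the following sketch builds the request parameters for a 90-day archive of EC2 events on the default event bus and for the 15-minute scheduled rule. It only constructs dictionaries, so it runs without AWS credentials. The archive name `Ec2EventsArchive` and the account ID `123456789012` are placeholders chosen for illustration.

```python
import json


def archive_request(account_id, region="us-east-2", name="Ec2EventsArchive"):
    """Parameters for an archive that keeps EC2 events from the default bus for 90 days."""
    return {
        "ArchiveName": name,  # placeholder name (assumption)
        "EventSourceArn": f"arn:aws:events:{region}:{account_id}:event-bus/default",
        "RetentionDays": 90,
        "EventPattern": json.dumps({"source": ["aws.ec2"]}),
    }


def schedule_rule_request(name="RunFunction", minutes=15):
    """Parameters for a rule that fires at a fixed rate."""
    unit = "minute" if minutes == 1 else "minutes"
    return {
        "Name": name,
        "ScheduleExpression": f"rate({minutes} {unit})",
        "State": "ENABLED",
    }


archive = archive_request("123456789012")  # placeholder account ID
rule = schedule_rule_request()
print(archive["EventSourceArn"])
print(rule["ScheduleExpression"])

# With boto3 installed and credentials configured, these dictionaries could be
# passed to the EventBridge client, for example:
#   events = boto3.client("events", region_name="us-east-2")
#   events.create_archive(**archive)
#   events.put_rule(**rule)
```

The rule still needs a target (the Lambda function) and the function needs a resource-based permission for EventBridge; both are left out of this sketch.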


SIMULATION

A webpage is stored in an Amazon S3 bucket behind an Application Load Balancer (ALB). Configure the S3 bucket to serve a static error page in the event of a failure at the primary site.

1. Use the us-east-2 Region for all resources.

2. Unless specified below, use the default configuration settings.

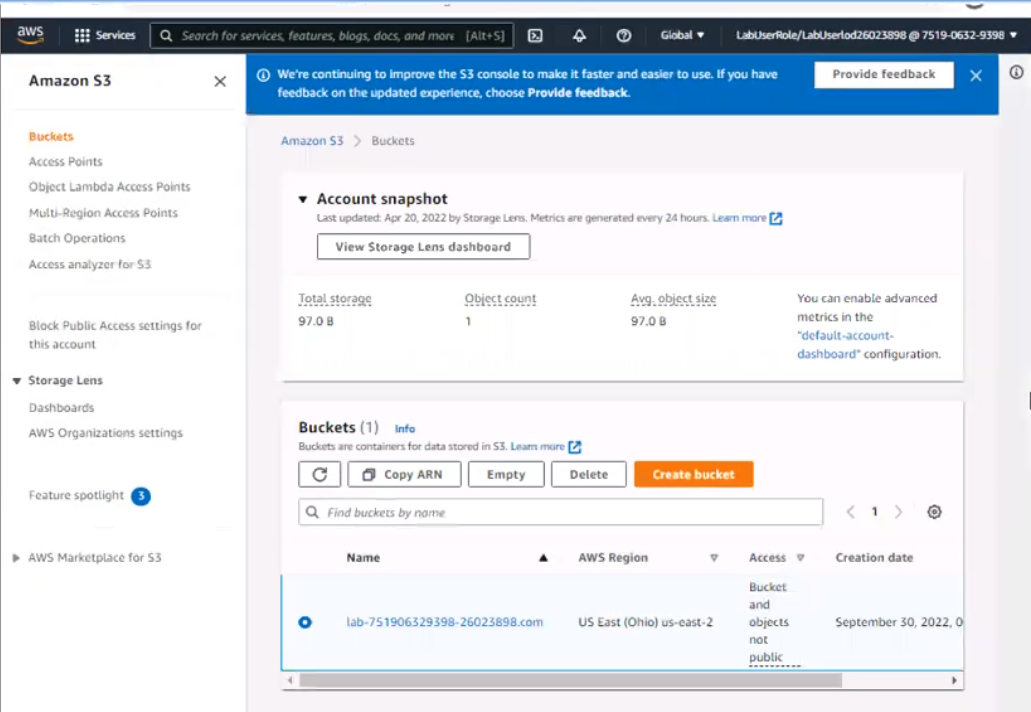

3. There is an existing hosted zone named lab-751906329398-26023898.com that contains an A record with a simple routing policy that routes traffic to an existing ALB.

4. Configure the existing S3 bucket named lab-751906329398-26023898.com as a static hosted website using the object named index.html as the index document.

5. For the index.html object, configure the S3 ACL to allow for public read access. Ensure public access to the S3 bucket is allowed.

6. In Amazon Route 53, change the A record for domain lab-751906329398-26023898.com to a primary record for a failover routing policy. Configure the record so that it evaluates the health of the ALB to determine failover.

7. Create a new secondary failover alias record for the domain lab-751906329398-26023898.com that routes traffic to the existing S3 bucket.

Correct : A

Here are the steps to configure an Amazon S3 bucket to serve a static error page in the event of a failure at the primary site:

Log in to the AWS Management Console and navigate to the S3 service in the us-east-2 Region.

Find the existing S3 bucket named lab-751906329398-26023898.com and click on it.

In the 'Properties' tab, click on 'Static website hosting' and select 'Use this bucket to host a website'.

In the 'Index Document' field, enter the name of the object that you want to use as the index document, in this case 'index.html'.

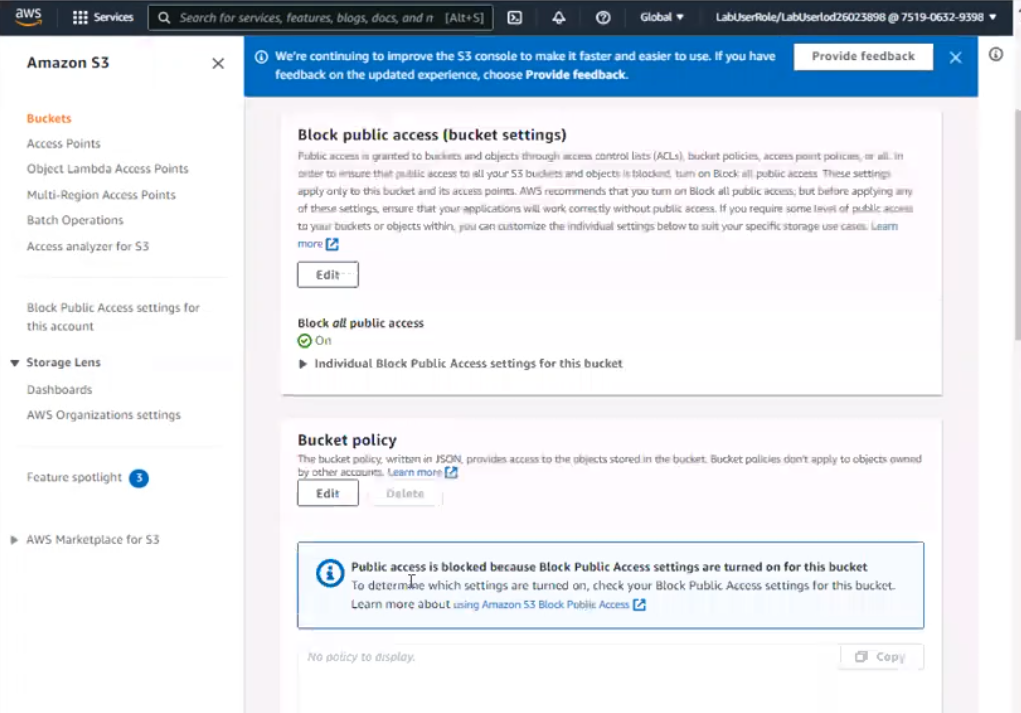

In the 'Permissions' tab, click on 'Block Public Access', and make sure that 'Block all public access' is turned OFF.

Click on 'Bucket Policy' and add the following policy to allow public read access:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::lab-751906329398-26023898.com/*"
    }
  ]
}

Now navigate to the Amazon Route 53 service, and find the existing hosted zone named lab-751906329398-26023898.com.

Select the existing A record and change its routing policy to 'Failover' with the failover record type 'Primary'. Keep the existing ALB as the alias target and turn on 'Evaluate target health' so that Route 53 uses the health of the ALB to decide when to fail over.

Click the 'Create record' button and create a new secondary failover alias record for the domain lab-751906329398-26023898.com that routes traffic to the existing S3 bucket.

Now, when the primary site (ALB) goes down, traffic will be automatically routed to the S3 bucket serving the static error page.
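The two failover records described above can be sketched as Route 53 change entries. This is a minimal illustration that only builds the dictionaries; the ALB DNS name and both alias hosted zone IDs are placeholders (assumptions) that would have to be replaced with the real values for the ALB and for the S3 website endpoint in us-east-2. How the existing simple record is converted is not covered here, since a simple record and a failover record with the same name and type cannot coexist.

```python
DOMAIN = "lab-751906329398-26023898.com"


def failover_alias_record(domain, role, alias_dns_name, alias_zone_id,
                          evaluate_health, action="CREATE"):
    """Build one change entry for an alias A record with a failover routing policy."""
    if role not in ("PRIMARY", "SECONDARY"):
        raise ValueError("role must be PRIMARY or SECONDARY")
    return {
        "Action": action,
        "ResourceRecordSet": {
            "Name": domain,
            "Type": "A",
            "SetIdentifier": f"{role.lower()}-failover",
            "Failover": role,
            "AliasTarget": {
                "HostedZoneId": alias_zone_id,
                "DNSName": alias_dns_name,
                "EvaluateTargetHealth": evaluate_health,
            },
        },
    }


# Primary: the ALB, with target health evaluation turned on.
primary = failover_alias_record(
    DOMAIN, "PRIMARY",
    alias_dns_name="example-alb-123456.us-east-2.elb.amazonaws.com",  # placeholder
    alias_zone_id="ZALBPLACEHOLDER",  # placeholder, not a real zone ID
    evaluate_health=True,
)

# Secondary: the S3 static website endpoint for the bucket's Region.
secondary = failover_alias_record(
    DOMAIN, "SECONDARY",
    alias_dns_name="s3-website.us-east-2.amazonaws.com",
    alias_zone_id="ZS3PLACEHOLDER",  # placeholder, look up the real value in the AWS docs
    evaluate_health=False,
)

change_batch = {"Changes": [primary, secondary]}
print([c["ResourceRecordSet"]["Failover"] for c in change_batch["Changes"]])
```

A dictionary shaped like `change_batch` is what the Route 53 `change_resource_record_sets` call takes as its `ChangeBatch` argument, together with the ID of the hosted zone.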

Note:

You can use CloudWatch to monitor the health of your ALB.

You can use Amazon S3 to host a static website.

You can use Amazon Route 53 for routing traffic to different resources based on health checks.

You can refer to the AWS documentation for more information on how to configure and use these services:

https://aws.amazon.com/route53/

https://aws.amazon.com/cloudwatch/
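The S3 settings from the steps above can also be written as SDK request bodies. The sketch below only builds and prints them, assuming the bucket name from the task; it does not call AWS.

```python
import json

BUCKET = "lab-751906329398-26023898.com"


def website_configuration(index_document="index.html"):
    """Static website hosting settings with a single index document."""
    return {"IndexDocument": {"Suffix": index_document}}


def public_read_policy(bucket):
    """Bucket policy that lets anyone read objects in the bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


website = website_configuration()
policy_json = json.dumps(public_read_policy(BUCKET), indent=2)
print(policy_json)

# With boto3 installed and credentials configured, these could be applied with:
#   s3 = boto3.client("s3", region_name="us-east-2")
#   s3.put_bucket_website(Bucket=BUCKET, WebsiteConfiguration=website)
#   s3.put_bucket_policy(Bucket=BUCKET, Policy=policy_json)
```

Generating the policy with `json.dumps` guarantees double-quoted, valid JSON, which is what the bucket policy editor expects.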


SIMULATION

You need to update an existing AWS CloudFormation stack. If needed, a copy of the CloudFormation template is available in an Amazon S3 bucket named cloudformation-bucket.

1. Use the us-east-2 Region for all resources.

2. Unless specified below, use the default configuration settings.

3. Update the Amazon EC2 instance named Devinstance by making the following changes to the stack named 1700182:

a) Change the EC2 instance type to t2.nano.

b) Allow SSH to connect to the EC2 instance from the IP address range 192.168.100.0/30.

c) Replace the instance profile IAM role with IamRoleB.

4. Deploy the changes by updating the stack using the CFServiceRole role.

5. Edit the stack options to prevent accidental deletion.

6. Using the output from the stack, enter the value of the ProdInstanceId in the text box below:

Correct : A

Here are the steps to update an existing AWS CloudFormation stack:

Log in to the AWS Management Console and navigate to the CloudFormation service in the us-east-2 Region.

Find the existing stack named 1700182 and click on it.

Click on the 'Update' button.

Choose 'Replace current template' and upload the updated CloudFormation template from the Amazon S3 bucket named 'cloudformation-bucket'

In the 'Parameters' section, update the EC2 instance type to t2.nano and add the IP address range 192.168.100.0/30 for SSH access.

Replace the instance profile IAM role with IamRoleB.

In the 'Capabilities' section, check the checkbox for 'IAM Resources'

Choose the role CFServiceRole and click on 'Update Stack'.

Wait for the stack to be updated.

Once the update is complete, select the stack, choose 'Stack actions', then 'Edit termination protection', and enable termination protection to prevent accidental deletion.

To get the value of the ProdInstanceId, navigate to the 'Outputs' tab in the CloudFormation stack and find the key 'ProdInstanceId'. The value corresponding to it is the value that you need to enter in the text box below.
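The update steps above can be sketched as SDK request parameters. This example only builds dictionaries and validates the CIDR range, so it runs offline. The parameter keys (`InstanceType`, `SSHLocation`, `InstanceProfileRole`) and the role ARN are hypothetical: the actual template is not shown, so its real parameter names may differ.

```python
import ipaddress

STACK_NAME = "1700182"


def update_stack_request(stack_name, role_arn, parameters):
    """Parameters for a stack update that reuses the current template."""
    return {
        "StackName": stack_name,
        "UsePreviousTemplate": True,
        "Parameters": [
            {"ParameterKey": key, "ParameterValue": value}
            for key, value in parameters.items()
        ],
        # Needed when the template creates or changes IAM resources.
        "Capabilities": ["CAPABILITY_IAM"],
        "RoleARN": role_arn,
    }


def termination_protection_request(stack_name):
    """Parameters that turn on termination protection for the stack."""
    return {"StackName": stack_name, "EnableTerminationProtection": True}


# Check that the SSH range is a well-formed network before using it.
ssh_range = ipaddress.ip_network("192.168.100.0/30")

update = update_stack_request(
    STACK_NAME,
    role_arn="arn:aws:iam::123456789012:role/CFServiceRole",  # placeholder ARN
    parameters={
        "InstanceType": "t2.nano",            # hypothetical parameter key
        "SSHLocation": str(ssh_range),        # hypothetical parameter key
        "InstanceProfileRole": "IamRoleB",    # hypothetical parameter key
    },
)
protect = termination_protection_request(STACK_NAME)
print(ssh_range.num_addresses)

# With boto3 installed and credentials configured:
#   cfn = boto3.client("cloudformation", region_name="us-east-2")
#   cfn.update_stack(**update)
#   cfn.update_termination_protection(**protect)
```

A /30 range covers four addresses, which the `ipaddress` check makes easy to confirm before the value goes into a security group rule.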

Note:

You can use AWS CloudFormation to update an existing stack.

You can use the AWS CloudFormation service role to deploy updates.


A company hosts an application on an Amazon EC2 instance in a single AWS Region. The application requires support for non-HTTP TCP traffic and HTTP traffic.

The company wants to deliver content with low latency by leveraging the AWS network. The company also wants to implement an Auto Scaling group with an Elastic Load Balancer.

How should a SysOps administrator meet these requirements?

Correct : D

AWS Global Accelerator and Amazon CloudFront are separate services that use the AWS global network and its edge locations around the world. CloudFront improves performance for both cacheable content (such as images and videos) and dynamic content (such as API acceleration and dynamic site delivery). Global Accelerator improves performance for a wide range of applications over TCP or UDP by proxying packets at the edge to applications running in one or more AWS Regions. Global Accelerator is a good fit for non-HTTP use cases, such as gaming (UDP), IoT (MQTT), or Voice over IP, as well as for HTTP use cases that specifically require static IP addresses or deterministic, fast regional failover. Both services integrate with AWS Shield for DDoS protection.
